What is it?

- Developed by Jakob Nielsen

- Helps find usability problems in a UI design

- Small set (3-5) of evaluators examine UI

- independently check for compliance with usability principles (“heuristics”)

- different evaluators will find different problems

- evaluators only communicate afterwards

- findings are then aggregated

- Can perform on working UI or on sketches

- I like to use it for baselines

Phases of Heuristic Evaluation

- Pre-evaluation training: give evaluators the needed domain knowledge and information on the scenarios

- Evaluation: individuals evaluate, then results are aggregated

- Severity rating: determine how severe each problem is (priority) and note a possible solution

- Debriefing: discuss the outcome with the design team

Heuristics

H1: Visibility of system status

H2: Match between system and real world

H3: User control and freedom

H4: Consistency and standards

H5: Error prevention

H6: Recognition rather than recall

H7: Flexibility and efficiency of use

H8: Aesthetic and minimalist design

H9: Help users recognize, diagnose, and recover from errors

H10: Help and documentation

How to Perform

At least two passes for each evaluator

- first to get feel for flow and scope of system

- second to focus on specific elements

Assistance from implementers/domain experts

- If system is walk-up-and-use or evaluators are domain experts, then no assistance needed

- Otherwise might supply evaluators with scenarios and have implementers standing by

Where problems may be found

- single location in UI

- two or more locations that need to be compared

- problem with overall structure of UI

- something that is missing

Example Problem Descriptions

Example 1: Can’t copy info from one window to another

Violates “Recognition rather than recall” (H6)

The Fix: allow copying

Example 2: Typography uses mix of upper/lower case formats and fonts

Violates “Consistency and standards” (H4)

The Fix: pick a single format for entire interface
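
The example descriptions above share one shape: the problem, the heuristic it violates, and a fix. Below is a minimal sketch of recording findings in that shape and merging the evaluators' independent lists. `Finding`, `HEURISTICS`, and `aggregate` are hypothetical names used only for illustration. Merging on exact matches is a simplifying assumption: in practice evaluators word the same problem differently, so duplicates are usually merged by hand.

```python
from dataclasses import dataclass

# The ten heuristics as numbered in these notes.
HEURISTICS = {
    1: "Visibility of system status",
    2: "Match between system and real world",
    3: "User control and freedom",
    4: "Consistency and standards",
    5: "Error prevention",
    6: "Recognition rather than recall",
    7: "Flexibility and efficiency of use",
    8: "Aesthetic and minimalist design",
    9: "Help users recognize, diagnose, and recover from errors",
    10: "Help and documentation",
}


@dataclass(frozen=True)  # frozen makes findings hashable, so they can be de-duplicated
class Finding:
    description: str
    heuristic: int  # key into HEURISTICS
    fix: str

    def report(self) -> str:
        """Format the finding in the same style as the examples above."""
        name = HEURISTICS[self.heuristic]
        return (
            f"{self.description}\n"
            f'Violates "{name}" (H{self.heuristic})\n'
            f"The Fix: {self.fix}"
        )


def aggregate(per_evaluator):
    """Merge each evaluator's list, keeping the first copy of identical findings."""
    merged = {}
    for findings in per_evaluator:
        for finding in findings:
            merged.setdefault(finding, None)  # dicts preserve insertion order
    return list(merged)


copy_problem = Finding("Can't copy info from one window to another", 6, "allow copying")
type_problem = Finding(
    "Typography uses mix of upper/lower case formats and fonts",
    4,
    "pick a single format for entire interface",
)

# Two evaluators worked independently; one problem was found by both.
all_findings = aggregate([[copy_problem], [type_problem, copy_problem]])
for finding in all_findings:
    print(finding.report(), end="\n\n")
```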

Severity Ratings

- Used to allocate resources to fix problems

- Estimates of need for more usability efforts

- Combination of frequency, impact, and persistence (one time or repeating)

- Should be calculated after all evaluations are done

- Should be done independently by all evaluators

0 – don’t agree that this is a usability problem

1 – cosmetic problem

2 – minor usability problem

3 – major usability problem; important to fix

4 – usability catastrophe; imperative to fix
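
Because each evaluator rates severity independently, the separate ratings have to be combined before they can be used to set priorities. The sketch below averages them per problem and sorts the most severe first. Using the mean is an assumption, since these notes do not say how to combine ratings. `combine_ratings` and the sample scores are hypothetical.

```python
from statistics import mean

# The 0-4 scale from the list above.
SEVERITY_LABELS = {
    0: "not a usability problem",
    1: "cosmetic problem",
    2: "minor usability problem",
    3: "major usability problem",
    4: "usability catastrophe",
}


def combine_ratings(ratings):
    """Average each problem's independent ratings and sort most severe first.

    ratings maps a problem description to a list of 0-4 scores,
    one score per evaluator.
    """
    for problem, scores in ratings.items():
        if not scores:
            raise ValueError(f"no ratings for: {problem}")
        if any(score not in SEVERITY_LABELS for score in scores):
            raise ValueError(f"rating outside the 0-4 scale for: {problem}")
    averaged = {problem: mean(scores) for problem, scores in ratings.items()}
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)


# Made-up ratings from three evaluators, for illustration only.
sample = {
    "mixed typography": [1, 2, 1],
    "no copy between windows": [3, 4, 3],
}
for problem, severity in combine_ratings(sample):
    print(f"{severity:.1f}  {problem}")
```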

Debriefing

- Conduct with evaluators, observers, and development team members

- Discuss general characteristics of UI

- Suggest potential improvements to address major usability problems

- Dev. team rates how hard things are to fix

- Make it a brainstorming session

Results of Using HE

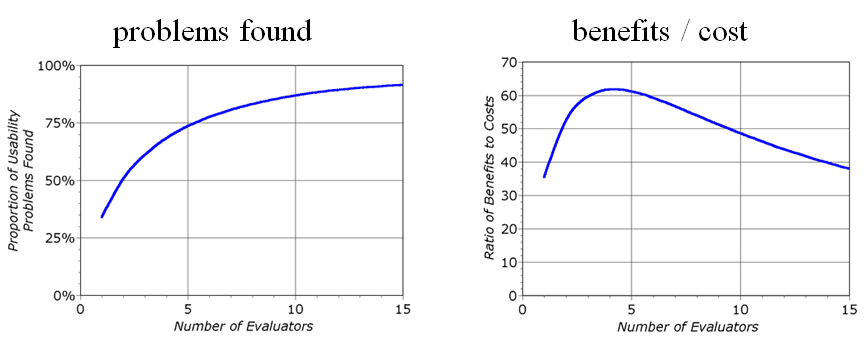

- Single evaluator achieves poor results: finds only 35% of usability problems

- 5 evaluators find ~75% of usability problems

- why not more evaluators? 10? 20?

- adding evaluators costs more

- each additional evaluator finds fewer new unique problems, because most of what they find has already been reported
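
The shape of this trade-off can be sketched with a simple model. It assumes every evaluator independently finds the same fraction `p` of the problems, so `n` evaluators together find `1 - (1 - p)**n`. The independence assumption is not from these notes and is not the data behind the graph. With `p = 0.35` the model predicts higher coverage at five evaluators than the ~75% quoted above, so read it only as an illustration of why the curve flattens. `coverage` and `marginal_gains` are hypothetical names.

```python
def coverage(n, p=0.35):
    """Fraction of problems found by n evaluators under the independence assumption."""
    return 1 - (1 - p) ** n


def marginal_gains(max_n, p=0.35):
    """Extra coverage contributed by evaluator 1, 2, ..., max_n."""
    return [coverage(n, p) - coverage(n - 1, p) for n in range(1, max_n + 1)]


# Each added evaluator contributes less than the one before.
for n, gain in enumerate(marginal_gains(10), start=1):
    print(f"{n:2d} evaluators: {coverage(n):.1%} found (+{gain:.1%})")
```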

Decreasing Returns

Note from Nielsen: These graphs are for a specific example

Learn more:

Nielsen Norman Group: UX Training, Consulting, & Research (nngroup.com)